20 years of algorithm updates reveal a pattern that gives us a clear picture of how Google is trying to change SERPs moving forward.

How does your business adapt to the constantly changing organic search landscape?

Google is making changes to search at an ever-increasing rate. In 2010, Google made about 400 algorithm changes over the course of the year; in 2018, they made more than 3,200, or roughly 9 per day. These changes vary in scope and scale, and it’s important to track them because they can cause significant fluctuations in your rankings.

Depending on how prepared and responsive you are to Google’s changes, an update can significantly help or hurt your rankings. While it’s impossible to predict the specific changes Google will make in the future, two decades of algorithm updates reveal a clear pattern in how Google is trying to change SERPs moving forward.

To help you understand how the search landscape is changing and what you should do to prepare for future Google algorithm updates, we’ve created this in-depth guide. Learn what a Google algorithm update is, the main types of updates, how to track them, and what you can do to prepare for future search engine changes.

What is a Google algorithm update?

Google’s ultimate goal is to provide the best, most relevant results for every search. To achieve this, Google uses a secret algorithm that weighs a combination of ranking factors to determine which pages to show and in what order. Although there is one main Google algorithm, it draws on data from an unspecified number of micro-algorithms that are constantly being adjusted.

Google algorithm updates are tweaks and refinements to the main and micro-algorithms that adjust the importance of ranking factors. There are hundreds of ranking factors, some confirmed and others speculative. They range from concepts such as having an ideal on-page word count to earning links from a certain number of high-quality websites (known as backlinks). Some ranking factors are more important than others, but how much each one matters is largely speculation based on the experience and results of SEO experts.

Google doesn’t like to disclose how they rank sites because they don’t want people trying to game the system, which could give lower-quality sites using shady tactics an advantage over sites that are actually more relevant to users. As a result, Google typically only shares details about major algorithm updates.

Types of Google algorithm updates

In general, there are three types of Google algorithm updates, which differ in the impact they are expected to have.

Major Updates

Major updates have a specific purpose, and some even warrant the creation of a new micro-algorithm. These updates often have memorable names such as “Panda” or “Penguin” and target a specific issue, such as duplicate content or link spam. In some cases, Google gives webmasters advance notice of these changes so they have adequate time to update their sites. For example, in June 2020 Google shared information on how Core Web Vitals would become an important ranking factor in 2021. Major updates are relatively rare, usually one or two per year.

Broad Core Updates

Whereas major updates address a specific concern and involve adjusting micro-algorithms, broad core updates are more general in nature and usually involve an adjustment to Google’s main algorithm. Although you might expect a change to the main algorithm to have a larger impact than updates to the micro-algorithms, a broad core update typically just means that Google has changed the importance of a few ranking factors. For example, Google may decide that page load speed and backlink quality should count for more than content word count. Broad core updates typically occur three to four times a year.

Minor Updates

Minor updates are the ones that happen on a daily basis without you realizing it. There are thousands of these each year, and most are slight refinements of micro-algorithms to help improve the relevancy of search results.

How to track Google algorithm updates

Major and broad core algorithm updates can cause significant fluctuations in your rankings, which in turn can affect your traffic, conversions, and revenue. That’s why it’s a good idea to follow the latest search news so you’re aware of any recent or upcoming changes. Along with learning how to prepare for and respond to algorithm changes, you’ll also gain insight into SEO best practices.

To make sure you’re aware of the latest algorithm changes, here are a few resources to use:

1. SEO Blogs

One of the best ways to stay in the loop on algorithm updates is to check SEO blogs regularly. Beyond news on recent and upcoming algorithm updates, you’ll likely get expert tips and advice on a range of other search-related subjects. Get information straight from the source by following Google’s Webmaster Central Blog (now the Google Search Central Blog), or check out other popular SEO blogs such as Search Engine Journal, Search Engine Land, or The SEM Post.

2. Twitter

If you’re on Twitter, fill your feed with SEO news and advice from industry experts. If you aren’t sure who to follow, check out this list of some of the top SEO experts on the platform. Start by following a lot of industry leaders, then narrow it down to your favorites later on. If you find yourself consistently enjoying articles from a particular author, give them a follow as well.

3. SEMrush Sensor

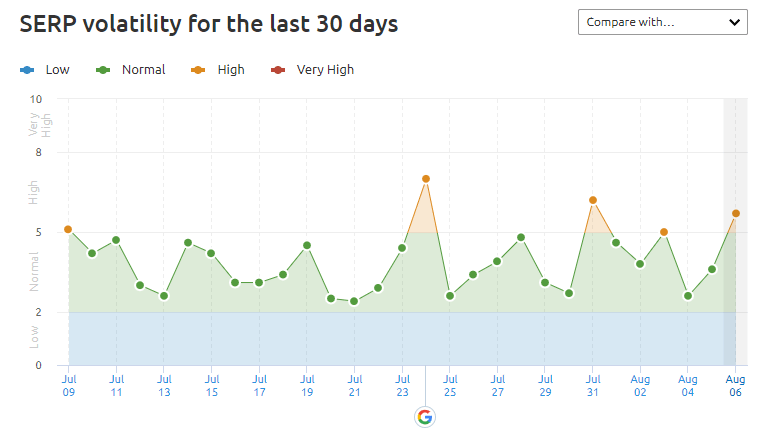

The SEMrush Sensor shows the volatility of search rankings over time to indicate whether there may have been a recent algorithm update. The tool offers a range of options for digging deeper into the data: you can view volatility by site category, device type, and SERP feature, among other things. It even has a “Winners and Losers” analysis that shows which industries benefited and suffered the most from recent SERP changes.

4. SEJ History of Algorithm Updates

For historical data on algorithm updates, Search Engine Journal keeps a breakdown of all important updates since 2003. You can see when each update occurred, along with a brief summary of its purpose and links to blog posts that cover the changes in more depth. The page is updated as new major and broad core updates go into effect.

How to check if you’ve been impacted by an algorithm update

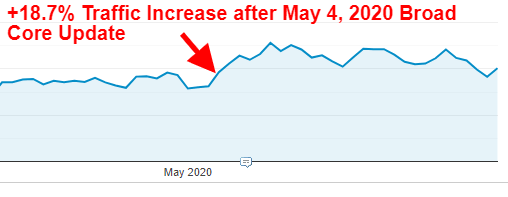

An algorithm update may or may not affect your business. If you notice a sudden, significant increase or decrease in performance, check whether there was a recent algorithm update. Likewise, if you hear about an update and want to know whether it affected your site, start in Google Analytics and look for notable increases or decreases in organic traffic around the date of the change.

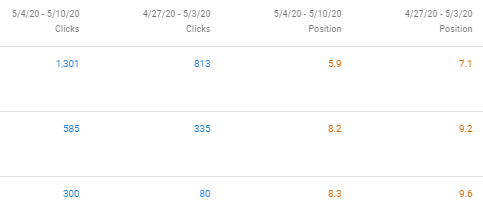

For example, after the May 4, 2020 broad core update, one of our clients saw an immediate 18.7% increase in organic traffic on the day of the update, and by the end of the week traffic was up 57.8% compared to before the update.
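
To run the same kind of before-and-after comparison on your own site, you can compare average daily organic sessions in a window before the update with the same-length window starting on the update day. The Python sketch below assumes you have exported daily organic sessions (for example, from Google Analytics) into a dict keyed by date; the function name `traffic_change` and the seven-day default window are illustrative choices, not features of any analytics tool.

```python
from datetime import date, timedelta


def traffic_change(daily_sessions: dict[date, float], update_day: date, window: int = 7) -> float:
    """Percent change in average daily sessions after vs. before an update.

    "Before" is the `window` days ending the day before the update;
    "after" is the `window` days starting on the update day.
    Raises KeyError if any day in either window is missing from the export.
    """
    before = [daily_sessions[update_day - timedelta(days=i)] for i in range(1, window + 1)]
    after = [daily_sessions[update_day + timedelta(days=i)] for i in range(window)]
    avg_before = sum(before) / window
    avg_after = sum(after) / window
    if avg_before == 0:
        raise ValueError("no baseline traffic before the update")
    return (avg_after - avg_before) / avg_before * 100


update = date(2020, 5, 4)  # the May 2020 broad core update
# Toy data for illustration only: 100 sessions/day before, 150/day after.
sessions = {update + timedelta(days=d): 100 if d < 0 else 150 for d in range(-7, 7)}
print(f"{traffic_change(sessions, update):+.1f}%")  # +50.0%
```

Averaging over a window rather than comparing single days smooths out normal weekday and weekend swings, which makes a genuine update-related shift easier to spot.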

Algorithm updates can take days to fully roll out, and rankings often keep shifting while pages are re-ranked, so don’t jump into Google Search Console (GSC) right away; position data is unlikely to have settled at this point. Wait a few days to a week, then check for notable position changes that explain the increase or decrease in traffic.

In the example above, we looked at GSC a week after the update and found that position gains for several terms led to a large increase in clicks.

7 SEO best practices to prepare for an algorithm update

In a blog post regarding how to prepare for and respond to algorithm changes, Google stated, “We suggest focusing on ensuring you’re offering the best content you can. That’s what our algorithms seek to reward.”

At the end of the day, Google wants brands to focus on improving the user experience and content relevance rather than chasing algorithm updates. Unless Google clearly outlines what they want webmasters to adjust ahead of a major update, the best way to prepare for an algorithm update is simply to follow these SEO best practices.

1. Create high-quality content that’s relevant to your audience

Writing great content that’s relevant to your audience is the best way to establish authority in your niche and future-proof against algorithm updates. Make sure each page on your site has a specific purpose and ensure the on-page content provides clear and helpful information to users.

If you don’t have a blog, start one and focus on writing content that would be appealing and helpful for your audience. A common SEO mantra is “content is king,” in part because a good blog lets you rank for relevant keywords that drive new traffic to your site. For example, an online plumbing parts retailer that writes a guide on fixing a common plumbing issue may see an influx of traffic from people looking to do the repair themselves. The retailer could link to the parts people need to complete the project, which can drive conversions and revenue.

Furthermore, consistently writing about subjects related to your industry signals to Google that you’re an expert, which can help you rank higher for relevant keywords. When an algorithm update occurs and rankings shift, a wealth of content that establishes you and your brand as industry experts will put your site in a good position to retain or even improve its rankings.

2. Update or prune outdated, thin, and duplicate content

When it comes to content, the focus should be on quality over quantity. It’s better to have one great piece of content that ranks high than five mediocre pages that rank low in SERPs.

Pruning a tree refers to trimming small or dying branches to sustain and encourage growth among flourishing branches. Similarly, content pruning involves updating or removing underperforming content to help encourage growth among your most important pages.

Every few months, take a look at your content and see which pages have declined or had limited growth. Common causes for these issues are:

- Thin content

- Outdated content

- Duplicate content

Address these issues by updating, consolidating, or removing pages, depending on the specific problem you find. Google’s algorithms are constantly being refined to filter poor pages out of search results, so update or remove weaker pages to make sure Google sees only high-quality content from your site.
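
A first pass at finding thin and duplicate pages can be automated. The Python sketch below assumes you already have each page’s main text (for example, from a site crawl export) in a dict keyed by URL. The 300-word threshold is an arbitrary placeholder, not a Google rule, and exact hashing only catches verbatim duplicates, so near-duplicates still need a manual look.

```python
import hashlib
import re
from collections import defaultdict

THIN_WORD_THRESHOLD = 300  # hypothetical cutoff; tune it for your site and content type


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting differences don't matter.
    return re.sub(r"\s+", " ", text).strip().lower()


def audit_pages(pages: dict[str, str]) -> tuple[list[str], list[list[str]]]:
    """Return (thin_urls, duplicate_groups) for a mapping of URL -> page text."""
    thin = []
    by_hash = defaultdict(list)
    for url, text in pages.items():
        clean = normalize(text)
        if len(clean.split()) < THIN_WORD_THRESHOLD:
            thin.append(url)
        # Pages whose normalized text is identical share the same digest.
        digest = hashlib.sha256(clean.encode("utf-8")).hexdigest()
        by_hash[digest].append(url)
    duplicates = [urls for urls in by_hash.values() if len(urls) > 1]
    return thin, duplicates
```

The output is a review list, not a removal list: a short page can still be useful, and the right fix for a duplicate group is often to consolidate it into the strongest page.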

3. Ensure your site is easy to navigate

Does your website taxonomy make sense? A good user experience is essential for ranking well, so make sure your site structure makes sense for both users and crawlers. Users who’ve never been to your site should be able to easily find what they’re looking for through your on-site menus, footer, and internal links. You should also use a well-organized XML sitemap to help crawlers find the important pages on your site.
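
A sitemap for crawlers is usually an XML file that follows the sitemaps.org protocol: a `urlset` element in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace, with one `url` entry per page containing a `loc` and, optionally, a `lastmod` date. Most CMS platforms and SEO plugins generate this for you; the Python sketch below, which uses only the standard library and always writes both fields, just shows what the file contains. The example URL and date are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date as YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as &
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([("https://www.example.com/", "2020-06-01")]))
```

Only list the canonical, indexable pages you want found; leaving out low-value URLs keeps the sitemap a clear signal of what matters on your site.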

URL structure is also an important part of taxonomy, so use clear and concise URLs rather than the random character strings some CMS platforms generate by default. For example, “/how-to-prepare-for-a-google-algorithm-update” makes a lot more sense than “1qfgRwKDo9mT_dxkuvu9FZTPO3xEJW4o4k”. Google also says that long URLs may intimidate users, so keep URLs short and simple rather than using the full title of an article if it’s overly long.
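
If your CMS lets you set URLs by hand, a short helper can turn an article title into a readable slug like the one above. This Python sketch lowercases the title, strips accents and punctuation, joins words with hyphens, and trims the result at a word boundary; the function name `slugify` and the 60-character limit are assumptions for illustration, not a Google guideline.

```python
import re
import unicodedata


def slugify(title: str, max_length: int = 60) -> str:
    """Turn an article title into a short, hyphenated URL slug."""
    # Decompose accented characters and drop the non-ASCII marks (é -> e).
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Keep only letters, digits, whitespace, and hyphens.
    words = re.sub(r"[^a-z0-9\s-]", "", ascii_title.lower())
    # Collapse runs of whitespace/hyphens into single hyphens.
    slug = re.sub(r"[\s-]+", "-", words).strip("-")
    if len(slug) > max_length:
        # Cut at the last hyphen so no word is chopped in half.
        cut = slug[: max_length + 1]
        slug = cut.rsplit("-", 1)[0] if "-" in cut else slug[:max_length]
    return slug


print(slugify("How to Prepare for a Google Algorithm Update"))
# how-to-prepare-for-a-google-algorithm-update
```

For very long titles, editing the slug by hand down to its key terms usually reads better than any automatic truncation.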

4. Make sure your site is using HTTPS

HTTPS encryption helps secure your website, and Google has used it as a ranking signal since 2014. If you aren’t sure whether your site uses HTTPS, look at the URL in your browser’s address bar for a lock icon.

A lock icon means your site is using HTTPS and no further action is needed. If you don’t see the lock icon, you’ll need to install an SSL/TLS certificate. Once it’s installed and HTTP requests are redirected to HTTPS, every page on your site will be served securely, including any future pages you create.

As more and more sites implement HTTPS encryption, sites that stick with HTTP will be at a disadvantage due to a perceived lack of security in the eyes of Google. Even if a page does somehow rank without HTTPS, browsers such as Chrome label HTTP pages “Not secure,” so users who click may be wary of proceeding, which can hurt your conversions.

5. Perform technical check-ups

A full technical audit typically takes several weeks or even months depending on the complexity of your site, so most organizations only do one every couple of years. In between, run a technical check-up every couple of months to make sure no glaring issues have popped up that could be hurting your site.

If you aren’t sure where to start, follow this essential mini technical audit checklist:

- Fix crawl and schema errors noted in GSC

- Check to see if important pages are blocked in robots.txt

- Remove non-important pages from your sitemap

- Update broken links

- Remove unnecessary redirect chains

- Ensure images are using alt text

- Update canonical tags that point to non-indexable URLs

- Ensure your pages are using proper heading tags

- Revise metadata for low-CTR pages (and fix titles and meta descriptions that are too short or too long)
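
One checklist item, making sure important pages aren’t blocked in robots.txt, is easy to script with Python’s built-in `urllib.robotparser`. The sketch below parses robots.txt rules passed in as text so it runs offline; against a live site you would instead call `RobotFileParser.set_url()` and `read()`. The rules, page list, and `Googlebot` user agent shown here are hypothetical examples to adapt.

```python
from urllib.robotparser import RobotFileParser


def blocked_pages(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules with a mistake: the whole blog is disallowed.
rules = """
User-agent: *
Disallow: /checkout/
Disallow: /blog/
"""
important = ["https://www.example.com/products/", "https://www.example.com/blog/algorithm-updates"]
print(blocked_pages(rules, important))
# ['https://www.example.com/blog/algorithm-updates']
```

Python’s parser follows the basic robots.txt rules and may not handle every extension Google supports (such as wildcards), so confirm anything surprising with GSC’s own tools.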

A solid technical foundation will help your site succeed as future algorithm updates roll out, and you may notice higher conversions from a better user experience.
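
Another item from the checklist above, images without alt text, can be spot-checked on a single page with Python’s built-in `html.parser`. This minimal sketch flags `<img>` tags whose `alt` attribute is missing or blank; the class name `ImgAltAudit` is hypothetical. An empty `alt=""` is legitimate for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class ImgAltAudit(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; self-closing <img/> also lands here.
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src") or "(no src)")


page_html = '<p><img src="/hero.jpg" alt="Plumber fixing a sink"><img src="/pipe.png"></p>'
audit = ImgAltAudit()
audit.feed(page_html)
print(audit.missing_alt)  # ['/pipe.png']
```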

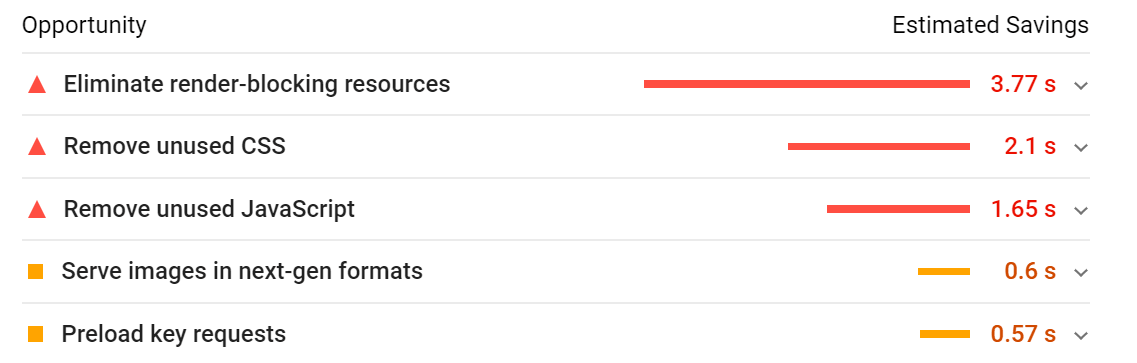

6. Work on improving site speed

Data suggests that 57% of visitors will leave a site if it takes more than three seconds to load, and 80% of these visitors will not come back to the same site again. Load times have been a ranking factor since 2010, with Google giving preference to sites that are fast and responsive. If you’re curious how Google views your website, try Google’s free PageSpeed Insights tool, which scores a page on both mobile and desktop and offers recommendations to help improve its speed.

Common issues include large images and unnecessary code, and PageSpeed Insights flags several other problems you can address to improve the user experience on your site.
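
PageSpeed Insights can also be queried through its public API (the v5 `runPagespeed` endpoint), which is handy for checking a list of key pages on a schedule. This standard-library Python sketch assumes the response exposes the score at `lighthouseResult.categories.performance.score` as a 0 to 1 value (the web tool displays it as 0 to 100); for anything beyond occasional use you will likely need an API key (the `key` parameter), which is omitted here. The helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a PageSpeed Insights API request URL; strategy is 'mobile' or 'desktop'."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(psi_response: dict) -> int:
    """Extract the Lighthouse performance score (0-1 in the JSON) as a 0-100 number."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def check_page(page_url: str, strategy: str = "mobile") -> int:
    # Network call: runs a live Lighthouse test, which can take several seconds.
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as resp:
        return performance_score(json.load(resp))
```

Calling `check_page("https://www.example.com/", "desktop")` would return that page’s desktop performance score. Scores vary somewhat between runs, so look at trends across several checks rather than a single number.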

Much like fixing technical issues, increasing your site speed will help improve the user experience which will serve you well with future algorithm updates.

7. Disavow spammy backlinks

Getting backlinks from high-quality sites will help your pages rank better, as Google sees this as an indicator that your site has valuable information. Not all links are equal, though: your site may pick up an influx of irrelevant backlinks from spammy sites, which can actually hurt your rankings.

To keep spammy backlinks from hurting your rankings, review the backlinks you’ve gained every few months to decide whether any should be disavowed. Disavowing a link essentially tells Google not to associate it with your site, and Google provides detailed instructions for doing so. Google recommends disavowing only when you’re confident that a considerable number of spammy, artificial, or low-quality links are causing problems for your site. Run this review periodically, or whenever you experience a sudden rankings drop that you suspect is related to an influx of poor backlinks.
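
Google’s disavow tool accepts a plain text file with one entry per line: either a full URL or a whole domain prefixed with `domain:`, with lines starting with `#` treated as comments. The Python sketch below assembles such a file from domains and URLs you have already reviewed by hand; the function name and inputs are hypothetical, and it assumes domains are given as bare hostnames. Deciding which links are actually harmful remains a manual judgment.

```python
def build_disavow_file(domains: list[str], urls: list[str]) -> str:
    """Return disavow-file text: 'domain:' lines for whole domains, then individual URLs."""
    # Normalize, deduplicate, and sort so repeated runs produce a stable file.
    clean_domains = sorted({d.strip().lower() for d in domains if d.strip()})
    clean_urls = sorted({u.strip() for u in urls if u.strip()})
    lines = ["# Links reviewed and disavowed manually"]
    lines += [f"domain:{d}" for d in clean_domains]
    lines += clean_urls
    return "\n".join(lines) + "\n"


print(build_disavow_file(["spammy-links.example", "Spammy-Links.example"], ["https://bad.example/page.html"]))
```

Disavowing a whole domain with `domain:` is usually simpler than listing individual URLs when every link from that site is spam.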

Prepare your site for future Google algorithm updates

Based on historical data, we know Google is going to keep updating their algorithm to put the best, most relevant results in front of users. Hopefully you gained some valuable insights into practices you can use to prepare your site for future updates, but if you aren’t well versed in SEO and this seems a bit complex, you may want to consider working with an outside expert.

Effective Spend has a team of experienced SEO professionals who can help improve the foundation and maximize the ranking potential of your site using a combination of content, technical, and link building strategies. Learn more about our capabilities on our SEO page or contact us for a consultation.